In a world that is becoming increasingly interconnected, the importance of effective communication cannot be overstated. However, for the millions of individuals in the deaf and hard of hearing community, traditional communication methods often fall short, creating barriers that hinder their full participation in various aspects of life.

The beauty and complexity of sign language, a primary mode of communication for many in this community, have long posed a challenge for seamless interaction with the broader society.

Artificial Intelligence (AI) is beginning to address this hurdle. In recent years, AI has shown promise in making sign language translation more accessible for the Deaf community. This combination of advanced technology and expressive language not only makes communication easier but also aligns with the growing expectation that businesses and communities should be more inclusive.

In this blog, we'll uncover why sign language is so important, highlight the challenges faced by the deaf community, and see how AI is making a big difference in translating sign language.

The Importance of Sign Language

Communication is like a thread that weaves us all together, allowing us to share thoughts, emotions, and ideas. But what happens when that thread is frayed for some, particularly for the deaf and hard of hearing community? This is where sign language comes in as a nuanced mode of expression, offering a unique way for individuals to connect and communicate.

Sign Language: More Than Hand Movements

Sign language is not just waving hands around; it's a language that combines hand movements, facial expressions, and body gestures. Imagine a conversation where every raised eyebrow, every subtle smile, and every twist of the wrist conveys meaning far beyond words.

Contrary to common belief, sign language is not just a simplified version of spoken language. It has its own grammar, syntax, and rules. It's a complete and rich language that can express complex thoughts and emotions. From the graceful flow of fingers to the intensity of facial expressions, sign language paints a vivid picture of communication.

Reports suggest that, globally, approximately 70 million people use sign language as their primary means of communication. These individuals navigate a world that isn't always attuned to their language, facing daily hurdles in education, work, and social interactions.

These challenges are not just inconveniences; they are barriers that hinder full participation in society. Consider someone trying to follow a lecture without an interpreter, or attending a medical appointment without clear communication. These are the everyday hurdles that make accessibility and inclusivity crucial for fostering a truly connected and understanding world.

This stark reality highlights the pressing need for innovative solutions that can bridge these communication gaps and empower a broader segment of the population.

Challenges Faced by the Deaf Community

Sign language is vital for the Deaf and Hard of Hearing community. Even so, its members encounter several challenges that affect different aspects of their lives.

A. Everyday Communication Hurdles

Deaf individuals often face difficulties in routine situations such as ordering food or participating in meetings. The lack of understanding or awareness from those unfamiliar with sign language exacerbates these challenges, leading to feelings of isolation.

B. Limited Access to Information

Accessing information becomes a challenge as traditional methods like written text or spoken language may not always be practical. In education, for example, where spoken words dominate lectures, effective learning can be compromised. Additionally, accessing timely news or announcements can be problematic, hindering their ability to stay informed.

C. Employment Challenges

Securing employment can be challenging for Deaf individuals due to the reliance on spoken language in job interviews and workplace communication. This not only affects their economic well-being but also contributes to the broader issue of underrepresentation in various industries, limiting career growth.

D. Healthcare Disparities

Navigating healthcare systems can be tricky due to potential miscommunication. In medical settings, the inability to convey nuanced information about symptoms or understand medical advice emphasizes the need for better communication channels. This can lead to disparities in healthcare access and outcomes.

E. Technological Gaps

Advancements in technology bring both opportunities and challenges. Deaf individuals may face barriers in using everyday technologies that do not consider their communication needs. For example, video content without proper captions or subtitles can be a barrier to accessing information online. These gaps also include limitations in video conferencing tools, mobile applications, and online platforms, which affect their seamless integration into the digital landscape.

F. Accessibility to Emergency Services

During emergencies or natural disasters, communication becomes critical for everyone's safety. Deaf individuals face challenges in receiving timely and accurate information, as traditional emergency communication methods may not cater to their needs.

Addressing these multifaceted challenges is essential for enhancing the overall quality of life for the deaf community.

How AI Technologies Are Being Utilized for Sign Language Translation

AI technologies are playing a significant role in advancing sign language translation, making communication more accessible for the deaf and hard-of-hearing communities. Here are some ways AI is being utilized in this context:

1. Computer Vision and Gesture Recognition

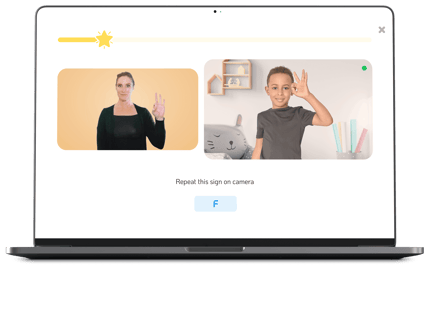

Camera-Based Systems: In computer vision-based sign language translation, cameras capture video input of sign language gestures. Advanced algorithms analyze this visual data, focusing on key elements such as hand movements, facial expressions, and body language. This allows the system to decipher the intricate details of sign language, providing a more comprehensive understanding of the communication.

Image source: SLAIT.AI
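
As a rough illustration of the kind of preprocessing a camera-based system might apply, the sketch below normalizes a set of 2D hand keypoints so that the same handshape looks the same wherever the hand sits in the frame and however close it is to the camera. It assumes an upstream detector has already produced the keypoints; the function name, the wrist-first ordering, and the sample coordinates are hypothetical and not taken from any specific product.

```python
import math

def normalize_hand_keypoints(keypoints):
    """Move the wrist to the origin and scale the farthest point to distance 1.

    Assumption: keypoints is a list of (x, y) pixel tuples and
    keypoints[0] is the wrist.
    """
    wrist_x, wrist_y = keypoints[0]
    centered = [(x - wrist_x, y - wrist_y) for x, y in keypoints]
    scale = max(math.hypot(x, y) for x, y in centered)
    if scale == 0:
        # All points coincide with the wrist; nothing to scale.
        return [(float(x), float(y)) for x, y in centered]
    return [(x / scale, y / scale) for x, y in centered]

# The same made-up handshape, once close to the camera and once far away.
near_hand = [(100, 100), (140, 100), (100, 180)]
far_hand = [(310, 50), (320, 50), (310, 70)]

print(normalize_hand_keypoints(near_hand))
print(normalize_hand_keypoints(far_hand))
```

Both calls print the same normalized coordinates, which is the point: later stages can then focus on the shape and motion of the hand rather than its position in the image.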

2. Machine Learning and Deep Learning

Gesture Recognition Models: Deep learning models, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are employed for gesture recognition. These models are trained on extensive datasets containing diverse sign language gestures. The deep learning architecture enables the system to discern complex patterns, improving the accuracy and adaptability of sign language translation.
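
To make the idea of a recurrent model more concrete, here is a minimal sketch of the forward pass of a simple (Elman-style) recurrent network that reads a sequence of per-frame feature vectors and outputs a probability for each sign. It is written in plain Python for readability. The weights and the two-feature frames are invented for illustration; a real system would learn its weights from data using a deep learning framework and would typically use a far larger model.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_classify(frames, w_in, w_rec, w_out):
    """Run a one-layer recurrent network over the frames, then classify.

    w_in:  one row of input weights per hidden unit
    w_rec: one row of recurrent weights per hidden unit
    w_out: one row of output weights per sign class
    """
    hidden = [0.0] * len(w_rec)
    for frame in frames:
        # Each new hidden state mixes the current frame with the previous state.
        hidden = [
            math.tanh(
                sum(w * x for w, x in zip(w_in[j], frame))
                + sum(w * h for w, h in zip(w_rec[j], hidden))
            )
            for j in range(len(hidden))
        ]
    scores = [sum(w * h for w, h in zip(row, hidden)) for row in w_out]
    return softmax(scores)

# Hypothetical, untrained weights: 2 features per frame, 2 hidden units, 2 classes.
w_in = [[0.5, -0.2], [0.1, 0.8]]
w_rec = [[0.3, 0.0], [0.0, 0.3]]
w_out = [[1.0, -1.0], [-1.0, 1.0]]
frames = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.1]]

probabilities = rnn_classify(frames, w_in, w_rec, w_out)
print(probabilities)
```

The hidden state is what lets the model take the order of frames into account, which matters because many signs differ in movement rather than in a single still pose.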

Apps like "SignAll" use computer vision to interpret sign language gestures captured by cameras. Users can communicate using sign language, and the app translates their gestures into written or spoken language, enabling seamless interaction.

3. Data Gloves and Wearable Devices

Sensor-Based Technologies: Wearable devices equipped with sensors, such as data gloves, capture not only hand movements but also the orientation and dynamics of gestures. The precise data collected enhances the fidelity of sign language translation. These devices often utilize accelerometers and gyroscopes to track the intricate motions, providing a more nuanced interpretation of sign language.
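
One common way to combine accelerometer and gyroscope readings is a complementary filter, sketched below for a single rotation axis. This is a generic sensor-fusion technique and not a description of any particular glove. The sample format, the 0.98 blending factor, and the function name are assumptions made for this example.

```python
import math

def complementary_filter(samples, dt, alpha=0.98):
    """Estimate a tilt angle (radians) from gyroscope and accelerometer data.

    samples: iterable of (gyro_rate_rad_per_s, accel_y, accel_z) tuples
    dt:      time between samples in seconds
    alpha:   how much to trust the integrated gyroscope reading (0..1)
    """
    angle = 0.0
    history = []
    for gyro_rate, accel_y, accel_z in samples:
        # The accelerometer gives an absolute but noisy angle from gravity.
        accel_angle = math.atan2(accel_y, accel_z)
        # The gyroscope gives a smooth but drifting angle by integration.
        angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * accel_angle
        history.append(angle)
    return history

# A hand held still at a 45 degree tilt: no rotation, gravity split evenly.
still_hand = [(0.0, 1.0, 1.0)] * 500
estimates = complementary_filter(still_hand, dt=0.01)
print(math.degrees(estimates[-1]))
```

The gyroscope term keeps the estimate responsive during fast movements, while the small accelerometer term slowly pulls it back toward the true orientation and cancels drift.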

4. Natural Language Processing (NLP)

Sign Language to Text Translation: NLP algorithms in sign language translation systems must understand the grammar, syntax, and linguistic nuances of sign languages. They convert the visual information into coherent and contextually relevant written text. This involves mapping sign language elements to linguistic structures, ensuring accurate and meaningful translations.
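
Before any grammatical translation can happen, the raw output of a recognizer usually needs tidying. The sketch below shows one hypothetical cleanup step: collapsing labels that repeat across consecutive video frames and merging fingerspelled letters into a single word. The gloss labels and function names are invented for illustration, and the sketch deliberately stops short of real translation, which has to account for sign language grammar.

```python
from itertools import groupby

def collapse_repeats(frame_labels):
    """Keep one label per run of identical consecutive frame predictions.

    Limitation: this also merges genuinely doubled letters in fingerspelling,
    so a real system would need timing information as well.
    """
    return [label for label, _ in groupby(frame_labels)]

def merge_fingerspelling(glosses):
    """Join runs of single-letter glosses into one fingerspelled word."""
    tokens, letters = [], []
    for gloss in glosses:
        if len(gloss) == 1 and gloss.isalpha():
            letters.append(gloss)
            continue
        if letters:
            tokens.append("".join(letters))
            letters = []
        tokens.append(gloss)
    if letters:
        tokens.append("".join(letters))
    return tokens

# Hypothetical per-frame recognizer output.
frame_labels = ["ME", "ME", "NAME", "NAME", "NAME", "J", "J", "O", "E"]
tokens = merge_fingerspelling(collapse_repeats(frame_labels))
print(tokens)
```

The resulting token list is the kind of input a downstream translation model could then turn into a fluent written sentence.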

5. Interactive Avatars and Virtual Characters

Avatar-Based Communication: AI-driven avatars or virtual characters are designed to replicate not only the physical gestures but also the expressiveness of sign language users. Advanced animation techniques and algorithms simulate the natural flow of sign language, enhancing the user experience. These avatars contribute to a more immersive and interactive communication environment.
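
At its simplest, animating an avatar between two signing poses means generating the in-between frames. The sketch below does this with plain linear interpolation of joint angles. The joint names and angle values are hypothetical, and production avatars generally rely on richer techniques (easing curves, motion capture data, facial animation) than this example shows.

```python
def interpolate_pose(start, end, t):
    """Blend two poses; t=0 returns start, t=1 returns end.

    Assumption: both poses are dicts mapping the same joint names
    to angles in degrees.
    """
    return {joint: start[joint] + (end[joint] - start[joint]) * t for joint in start}

def in_between_frames(start, end, count):
    """Return `count` evenly spaced poses strictly between start and end."""
    return [interpolate_pose(start, end, i / (count + 1)) for i in range(1, count + 1)]

rest_pose = {"wrist": 0.0, "elbow": 90.0}
sign_pose = {"wrist": 90.0, "elbow": 30.0}

for pose in in_between_frames(rest_pose, sign_pose, count=3):
    print(pose)
```

More in-between frames produce smoother motion, at the cost of more rendering work per second of animation.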

Hand Talk, a Brazilian startup, is pioneering accessibility through its app. Leveraging artificial intelligence, the Hand Talk app translates spoken or written language into Brazilian Sign Language (Libras) using an animated character named Hugo. This technology fosters inclusive communication, bridging gaps for deaf individuals.

6. Mobile Apps and Accessibility Tools

Real-Time Translation Apps: Mobile applications leverage the processing power of smartphones to perform real-time sign language translation. The integration of AI algorithms within these apps enables users to point their devices at sign language gestures, receiving instantaneous translations in the form of text or spoken words. This on-the-go accessibility enhances communication for deaf or hard-of-hearing individuals in various settings.
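
A practical issue in real-time translation is that frame-by-frame predictions can flicker between labels. One simple way to stabilize the displayed result is a sliding-window majority vote, sketched below. The class name, window size, and vote threshold are assumptions chosen for this example rather than settings from any real app.

```python
from collections import Counter, deque

class PredictionSmoother:
    """Show a label only once it wins enough votes in the recent window."""

    def __init__(self, window=5, min_votes=3):
        self.recent = deque(maxlen=window)  # oldest predictions drop off automatically
        self.min_votes = min_votes
        self.current = None  # nothing to display until a label is stable

    def update(self, label):
        """Add the newest per-frame prediction and return the label to display."""
        self.recent.append(label)
        top_label, votes = Counter(self.recent).most_common(1)[0]
        if votes >= self.min_votes:
            self.current = top_label
        return self.current

smoother = PredictionSmoother()
stream = ["HELLO", "HELLO", "THANKS", "HELLO", "HELLO"]
displayed = [smoother.update(label) for label in stream]
print(displayed)
```

In this run the single stray "THANKS" frame never reaches the screen. A larger window gives steadier output but adds delay, so the right setting depends on the frame rate and on how quickly users sign.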

7. Sign Language Databases

Training Datasets: The development of accurate sign language translation models relies on comprehensive training datasets. These datasets comprise diverse sign language expressions, covering various regional and cultural differences. Continuous expansion and curation of these databases are crucial for improving the model's ability to recognize and interpret a wide range of sign language gestures.
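
When working with such datasets, one widely used precaution is to split the data by signer, so that a model is tested on people it has never seen during training. The sketch below illustrates the idea. The record layout (a dict with a `signer` key), the function name, and the 20% test share are assumptions for this example.

```python
import random

def split_by_signer(samples, test_fraction=0.2, seed=0):
    """Split samples so that no signer appears in both training and test sets."""
    signers = sorted({sample["signer"] for sample in samples})
    random.Random(seed).shuffle(signers)  # seeded for a reproducible split
    test_count = max(1, round(len(signers) * test_fraction))
    test_signers = set(signers[:test_count])
    train = [s for s in samples if s["signer"] not in test_signers]
    test = [s for s in samples if s["signer"] in test_signers]
    return train, test

# Hypothetical dataset: five signers, two recorded signs each.
samples = [
    {"signer": f"signer_{i}", "sign": sign}
    for i in range(5)
    for sign in ("HELLO", "THANKS")
]
train_set, test_set = split_by_signer(samples)
print(len(train_set), len(test_set))
```

Splitting at random by clip instead would let the same person appear on both sides, which tends to make accuracy look better than it will be for new users.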

8. Integration with Communication Devices

Smart Assistants and Devices: AI-powered sign language translation can be integrated into smart devices and virtual assistants. This integration allows individuals to interact with these devices through signed visual input, as an alternative to voice commands. The adaptability of AI models to different communication interfaces enhances the accessibility of information and services for users with diverse needs.

Ethical Considerations

Privacy is a primary concern when developing an AI-driven sign language platform. Because these systems rely on cameras, they raise valid worries about how video of users is collected, stored, and used. Cultural sensitivity is equally important: biases in the technology must be addressed so that it accurately represents the diverse signing styles and speeds within the Deaf community.

By adhering to these ethical considerations, we not only uphold principles of fairness but also fortify the credibility of AI-driven sign language translation. The objective is to create a communication platform that is not only trustworthy but also genuinely inclusive for all.
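
One concrete way to check for the kind of bias described above is to measure accuracy separately for each group of signers instead of reporting a single overall number. The sketch below shows the calculation. The group labels and evaluation records are made up for illustration and do not reflect results from any real system.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Compute recognition accuracy per signer group.

    records: iterable of (group, predicted_sign, actual_sign) tuples
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation records grouped by signing speed.
records = [
    ("fast_signers", "HELLO", "HELLO"),
    ("fast_signers", "THANKS", "PLEASE"),
    ("slow_signers", "HELLO", "HELLO"),
    ("slow_signers", "PLEASE", "PLEASE"),
]
print(accuracy_by_group(records))
```

A large gap between groups suggests the training data or model under-serves some signers, which is a signal to collect more representative data before release.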

Building Inclusive AI Solutions for Businesses

In wrapping up, the fusion of AI with sign language translation is making communication meaningfully more accessible. We've seen how technology can break down the barriers that often isolate the deaf and hard of hearing community, creating a more inclusive society.

Looking ahead, there's a lot of promise for AI in sign language translation. Ongoing collaboration and research could change not just how we communicate but also open up new opportunities in education, employment, and community participation.

Interested in tailored AI solutions? Contact us to discuss how our AI development services can meet your specific needs.