The growing use of artificial intelligence (AI) in sensitive domains such as healthcare, hiring, and criminal justice has sparked a debate about fairness and bias. Human decision-making in these areas is also susceptible to bias, shaped by societal norms and unconscious beliefs.

The question is, will AI systems make less biased decisions than humans, or will they amplify and perpetuate these biases? This is a critical issue that needs to be addressed, as it has the potential to impact many individuals and communities.

In this post, we'll walk through how to address bias in AI. We'll explore the different types of bias that can exist in AI systems, the underlying causes of bias, and techniques for preventing and mitigating it.

What is AI bias?

AI bias is the phenomenon of machine learning algorithms producing prejudiced results that are unfair or discriminatory towards certain individuals or groups based on factors like race, gender, age, or socioeconomic status. This can happen when the algorithms are trained on biased data or when the developers make biased assumptions during the algorithm development process.

Types of AI bias

1. Data Bias: Data bias occurs when the data used to train an AI system is not representative of the population it is meant to serve. This can result in the AI system making biased decisions or recommendations that disadvantage certain groups of people. For example, a facial recognition algorithm trained on a dataset in which white faces are heavily overrepresented may be less accurate when used on people of other races.

2. Algorithmic Bias: Algorithmic bias occurs when the design or implementation of a machine learning algorithm produces biased results. This can happen due to various factors, such as how the training data is selected, which features are chosen, or what assumptions are built into the model.

For example, a company uses a machine learning algorithm to screen job applicants by analyzing their resumes. The algorithm is trained on previous hiring data and looks for patterns in the resumes of successful candidates. However, if the training data is biased towards a certain group (e.g., white men), the algorithm may learn to favor candidates who fit that group's characteristics, even if they are not the most qualified. This can result in qualified candidates from underrepresented groups being unfairly screened out.

3. User Bias: User bias occurs when users of AI systems exhibit bias, intentionally or unintentionally, in the input data they provide to the system. This can happen, for example, when users enter discriminatory or inaccurate data that reinforce existing biases in the system.

4. Technical Bias: Technical bias occurs when the hardware or software used to develop or deploy AI systems introduces bias into the system. For instance, a machine learning system may be trained on a limited dataset because of technical constraints, such as a lack of computing power or storage capacity. The resulting model can be less accurate or produce biased results because it was never exposed to a wider range of data.
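One way to make the data bias case above concrete is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below is illustrative only: the function name `representation_gaps`, the group labels, and the population shares are all assumptions, not figures from a real dataset.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Compare each group's share of the dataset with a reference population.

    Returns {group: dataset_share - reference_share}. Negative values mean
    the group is underrepresented in the data. Assumes `groups` is non-empty.
    """
    counts = Counter(groups)
    total = len(groups)
    return {
        g: counts.get(g, 0) / total - ref_share
        for g, ref_share in reference_shares.items()
    }

# Hypothetical toy data: one group label per training example.
train_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
# Hypothetical shares in the population the system is meant to serve.
population = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(train_groups, population)
for group_name, gap in sorted(gaps.items()):
    print(f"{group_name}: {gap:+.2f}")
```

Here group A is overrepresented by 20 percentage points, while B and C each fall 10 points short, which is the kind of gap a data audit would flag before training.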


Techniques for Mitigating AI Bias

No single technique makes an AI system impartial, so it is essential to use a range of techniques at different stages of the process. These techniques include pre-processing methods (applied to the data before training), algorithmic methods (applied during training), and post-processing methods (applied to the model's outputs after training). By combining these approaches, we can help mitigate bias in AI.

1. Pre-processing techniques: Pre-processing techniques involve transforming the input data before it is fed into the machine learning algorithm. These techniques can help mitigate bias by creating a more diverse and representative dataset. Some pre-processing techniques include:

- Data Augmentation: This technique generates new data points to increase the representation of underrepresented groups in the dataset. For instance, if the dataset contains fewer samples of a particular demographic group, data augmentation can be used to increase its size and diversity.

- Balancing and Sampling: These techniques adjust a dataset so that each group is adequately represented, typically by oversampling underrepresented groups or undersampling overrepresented ones. Applied carefully, they can reduce bias and improve the model's accuracy on underrepresented groups.
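As a rough illustration of the balancing idea above, the following sketch performs random oversampling: rows from smaller groups are duplicated at random until every group is as large as the largest one. The helper name `oversample_to_balance` and the toy rows are assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

def oversample_to_balance(rows, group_of, seed=0):
    """Randomly duplicate rows from smaller groups until all groups match the largest."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[group_of(row)].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced

# Hypothetical toy dataset: 8 rows from group A, 2 rows from group B.
rows = [{"group": "A", "x": i} for i in range(8)]
rows += [{"group": "B", "x": i} for i in range(2)]

balanced = oversample_to_balance(rows, group_of=lambda r: r["group"])
counts = Counter(r["group"] for r in balanced)
print(counts)
```

Two caveats apply to this kind of resampling: it should be done on the training split only, so duplicated rows do not leak into evaluation data, and heavy duplication of a handful of rows can encourage the model to memorize them.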

2. Algorithmic techniques: Algorithmic techniques mitigate bias by adjusting the machine learning algorithm itself. Such techniques include:

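- Regularization: Regularization adds a penalty term to the algorithm's loss function to prevent overfitting to the training data. On its own, standard regularization targets overfitting rather than unfairness, but it can keep a model from latching onto spurious patterns in the training data, and fairness-aware variants add a penalty for differences in outcomes between groups. This can help reduce bias and improve the accuracy of the model's predictions in real-world scenarios.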

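- Adversarial Training: In the fairness setting, this technique (often called adversarial debiasing) trains the model alongside an adversary that tries to infer a protected attribute, such as race or gender, from the model's predictions. The model is rewarded for making accurate predictions that the adversary cannot exploit, which pushes it to rely less on information tied to the protected attribute. This is distinct from adversarial training for robustness, which exposes the model to inputs designed to deceive it into making incorrect predictions.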

- Fairness Constraints: This involves imposing constraints on the model's optimization process, for example requiring similar selection rates or error rates across groups, so that it produces fair outcomes for all groups. This approach can help mitigate the effects of bias and discrimination in the model's decision-making process.
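To show how a fairness term can enter the optimization itself, here is a minimal sketch of logistic regression trained with an added penalty on the gap in mean predicted score between two groups (a soft form of a demographic parity constraint). Everything in it is an assumption made for illustration: the synthetic data, the penalty weight `lam`, and the helper names `train` and `score_gap`. It is not a production method, and other formulations of fairness constraints exist.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(X, w, b):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]

def score_gap(p, group):
    """Mean predicted score of group 1 minus mean predicted score of group 0."""
    s1 = [pi for pi, g in zip(p, group) if g == 1]
    s0 = [pi for pi, g in zip(p, group) if g == 0]
    return sum(s1) / len(s1) - sum(s0) / len(s0)

def train(X, y, group, lam, steps=500, lr=0.2):
    """Gradient descent on: mean log-loss + lam * score_gap**2.

    With lam=0 this is plain logistic regression.
    """
    n, d = len(X), len(X[0])
    n1 = sum(group)
    n0 = n - n1
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        p = predict(X, w, b)
        gap = score_gap(p, group)
        grad_w, grad_b = [0.0] * d, 0.0
        for x, yi, pi, g in zip(X, y, p, group):
            # Gradient of the log-loss with respect to this example's logit.
            dz = (pi - yi) / n
            # Gradient of lam * gap**2 with respect to the same logit.
            sign = 1.0 / n1 if g == 1 else -1.0 / n0
            dz += 2.0 * lam * gap * sign * pi * (1.0 - pi)
            for j in range(d):
                grad_w[j] += dz * x[j]
            grad_b += dz
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Synthetic data (illustrative assumption): the single feature is shifted
# upward for group 1, so it acts as a proxy for group membership.
rng = random.Random(0)
group = [i % 2 for i in range(200)]
X = [[rng.gauss(1.5 * g, 1.0)] for g in group]
y = [1 if x[0] + rng.gauss(0.0, 0.5) > 0.75 else 0 for x in X]

gaps = {}
for lam in (0.0, 5.0):
    w, b = train(X, y, group, lam)
    gaps[lam] = score_gap(predict(X, w, b), group)
    print(f"lam={lam}: score gap between groups = {gaps[lam]:.3f}")
```

Raising `lam` shrinks the gap between groups at some cost in predictive accuracy, so the weight is a trade-off to be chosen for the application rather than a value with one right answer.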

3. Post-processing techniques: Post-processing techniques aim to detect and reduce bias by analyzing, and where necessary adjusting, a model's outputs after training. Some examples of such techniques are:

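- Bias Metrics: This technique involves measuring the degree of bias in the model's predictions using quantitative metrics such as equalized odds (similar true positive and false positive rates across groups) and equal opportunity (similar true positive rates across groups). These metrics quantify where the model treats groups differently, so that the biases can then be corrected.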

- Explainability: This technique involves making the model's predictions more interpretable by providing explanations, such as highlighting the features that were most important in the decision-making process. This can help to increase transparency and accountability.

- Fairness Testing: This technique involves testing the model against fairness criteria such as statistical parity, disparate impact, and individual fairness. This can help to identify any bias that may have been missed during the training phase.
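The group-level metrics named above can be computed directly from a model's predictions. The sketch below assumes binary labels and predictions and two groups. The function names, the group labels, and the toy data are hypothetical, and the equalized odds summary used here (the larger of the true positive rate gap and the false positive rate gap) is one common convention among several.

```python
def rate(values):
    """Fraction of 1s in a list of 0/1 values (NaN if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")

def group_rates(y_true, y_pred, group, g):
    """Selection rate, true positive rate, and false positive rate for group g."""
    idx = [i for i, gi in enumerate(group) if gi == g]
    selection = rate([y_pred[i] for i in idx])
    tpr = rate([y_pred[i] for i in idx if y_true[i] == 1])
    fpr = rate([y_pred[i] for i in idx if y_true[i] == 0])
    return selection, tpr, fpr

def fairness_report(y_true, y_pred, group, reference, comparison):
    sel_r, tpr_r, fpr_r = group_rates(y_true, y_pred, group, reference)
    sel_c, tpr_c, fpr_c = group_rates(y_true, y_pred, group, comparison)
    return {
        # Difference in the share of positive predictions.
        "statistical_parity_difference": sel_c - sel_r,
        # Ratio of positive-prediction shares (1.0 means parity).
        "disparate_impact_ratio": sel_c / sel_r,
        # Difference in true positive rates.
        "equal_opportunity_difference": tpr_c - tpr_r,
        # Larger of the TPR gap and the FPR gap.
        "equalized_odds_gap": max(abs(tpr_c - tpr_r), abs(fpr_c - fpr_r)),
    }

# Hypothetical toy data: five examples from group A, then five from group B.
group = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0] + [1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0] + [1, 0, 0, 0, 0]

report = fairness_report(y_true, y_pred, group, reference="A", comparison="B")
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

In this toy data, group B is selected a quarter as often as group A and qualified members of B are approved a third as often, even though both groups have identical true labels.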

How to Tackle Bias in AI?

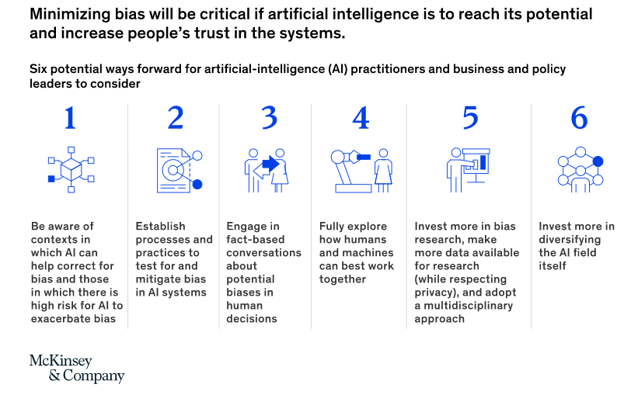

Tackling AI bias is a complex issue that requires a thoughtful approach. AI bias is rooted in human biases, so removing those biases from the data set should be a priority. However, it's not always as simple as deleting labels or removing protected classes. Other features, such as a zip code, can act as proxies for a protected attribute, so the bias can survive even after the attribute itself is removed. Removing these fields can also hurt the accuracy of the model.
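A quick check of why removing a protected column is not enough is to ask how well the remaining features predict it. The sketch below, using a hypothetical `zip_code` feature and made-up records, guesses the protected group from one feature by majority vote and compares that with always guessing the most common group.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, protected_values):
    """Return (accuracy, baseline) for guessing the protected attribute.

    accuracy: majority-vote guess per feature value, measured in-sample.
    baseline: always guessing the most common protected value.
    """
    by_value = defaultdict(Counter)
    for f, p in zip(feature_values, protected_values):
        by_value[f][p] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    return correct / n, baseline

# Hypothetical records: zip code Z1 is mostly group A, Z2 is mostly group B.
zip_code = ["Z1"] * 10 + ["Z2"] * 10
protected = ["A"] * 9 + ["B"] * 1 + ["A"] * 2 + ["B"] * 8

accuracy, baseline = proxy_strength(zip_code, protected)
print(f"guess from zip code: {accuracy:.2f}, baseline: {baseline:.2f}")
```

When the feature-based guess is far above the baseline, as it is here, a model can still reconstruct the protected attribute from that feature. Because the check is measured in-sample, it will overstate the effect for features with many distinct values.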

To minimize AI bias, it helps to follow a set of established best practices. The steps below reduce the likelihood of perpetuating existing biases and help make AI systems as fair and inclusive as possible.

So, let's dive in:

1. Accept the existence of bias: The first step in addressing bias in AI is to acknowledge its existence. This involves recognizing that AI systems can be biased and that this bias can have serious consequences.

2. Build a diverse and inclusive team: To ensure that AI systems are built with fairness and inclusivity in mind, it's important to have a diverse team of experts working on their development. This team should include people with different backgrounds, experiences, and perspectives, who can help you identify potential sources of bias in your AI systems that you may not have considered otherwise.

3. Identify potential biases: The next step is to identify potential biases in the data, algorithms, and decision-making processes used by AI systems. This can be done through a variety of techniques, such as data auditing and algorithmic auditing.

4. Establish an ethical framework: Develop an ethical framework for your AI system that outlines the values and principles that should guide the use of the technology. This can help you identify and address ethical concerns and ensure that the system is aligned with your company's ethical standards.

5. Test your AI systems: Test your AI systems on diverse datasets that are representative of the people who will be affected by them. Comparing results across groups can help you identify any biases and work to correct them, so that your systems are fair and equitable for all.

6. Promote transparency: To build trust in AI systems, it's important to promote transparency. This means being open about the data used, the algorithms used, and the decision-making processes involved.

7. Involve stakeholders: Involving stakeholders in the development process can help build trust and make it easier to identify and correct bias. It also ensures that their needs and concerns are taken into account when designing your AI systems.

8. Conduct regular audits: AI models are not static and can change over time, so it's important to monitor your AI systems for bias on an ongoing basis. This can help you identify any new biases that may emerge as your systems evolve, and ensure that your systems are making fair and accurate decisions for all.

9. Continuously learn and adapt: Finally, it's important to recognize that addressing bias in AI is an ongoing process. As new biases are identified and new techniques for addressing them are developed, AI systems should be updated and revised accordingly.
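The testing and audit steps above can be partly automated. As a minimal sketch, the code below checks each batch of logged predictions and flags any batch where one group's selection rate falls below a chosen fraction of another's. The batch labels, group names, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb) are assumptions made for this example, not recommended settings.

```python
def selection_rate(preds):
    """Fraction of positive (1) predictions."""
    return sum(preds) / len(preds)

def audit_batches(batches, reference, comparison, min_ratio=0.8):
    """Flag batches whose comparison/reference selection-rate ratio is too low.

    `batches` maps a label to a list of (group, prediction) pairs.
    Returns a list of (label, ratio); ratio is None when it cannot be computed.
    """
    flagged = []
    for label, records in batches.items():
        ref = [pred for grp, pred in records if grp == reference]
        cmp_preds = [pred for grp, pred in records if grp == comparison]
        if not ref or not cmp_preds or selection_rate(ref) == 0:
            # Not enough data to compute a ratio; surface it for manual review.
            flagged.append((label, None))
            continue
        ratio = selection_rate(cmp_preds) / selection_rate(ref)
        if ratio < min_ratio:
            flagged.append((label, ratio))
    return flagged

# Hypothetical logged predictions from two audit periods.
batches = {
    "2024-01": [("A", 1), ("A", 1), ("A", 0), ("A", 0),
                ("B", 1), ("B", 1), ("B", 0), ("B", 0)],
    "2024-02": [("A", 1), ("A", 1), ("A", 1), ("A", 0),
                ("B", 1), ("B", 0), ("B", 0), ("B", 0)],
}

flagged = audit_batches(batches, reference="A", comparison="B")
for label, ratio in flagged:
    print(label, "ratio unavailable" if ratio is None else f"ratio={ratio:.2f}")
```

Only the second period is flagged here, since group B's selection rate drops to a third of group A's. A flagged batch is a prompt for human review rather than proof of unfairness.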

The Bottom Line

As we wrap up this discussion, it's important to remember that there is no one-size-fits-all solution to AI bias. Each project and application has its unique requirements and limitations. However, by adopting a holistic approach and using a combination of tools and techniques, we can mitigate bias to a great extent.

Do you want to avoid costly mistakes that come from biased AI? Look no further than our AI development services! At Daffodil, we understand the significance of fairness and accuracy in AI systems and can help you implement robust strategies to achieve unbiased results.