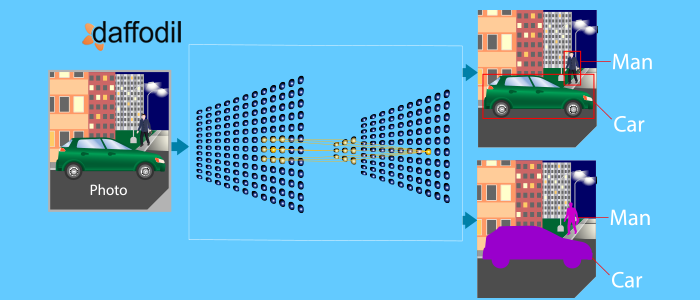

For humans, classifying objects in an image is straightforward; for machines, it is much harder. This process, known as image classification, involves assigning images to predefined classes. Since there can be any number of classes, manual classification becomes difficult even for humans once there are thousands of images. That is why automating object identification and classification with machines is gaining ground.

To make machines distinguish between objects and classify them, computer vision, a subfield of Artificial Intelligence, is used. Computer vision is an AI technology that enables digital devices (such as face detectors and QR code scanners) to identify and process objects in videos and images, much as humans do.

Computer vision works together with Machine Learning (ML), which enables machines to learn and improve from experience without being explicitly programmed for it. Models built with these technologies not only help in classifying images but also gradually learn to give more accurate output over time.

There are different ways digital devices can be trained for image classification. Using machine learning with computer vision, several training approaches can be used to help machines detect objects and classify them. Transfer learning is one of them.

In the sections that follow, we will discuss the transfer learning technique in detail. We will also look at a real-world example of its implementation in an AI project and the benefits that this model training technique offers.

Image Classification using Transfer Learning Technique

Transfer learning is a time-saving way of building image identification models, described by W. Rawat & Z. Wang among others. With the transfer learning approach, instead of building a model from scratch, the new model learns from a pre-trained model that has been trained on a large data set to solve a problem similar to the one at hand.

Consider this. A teacher has years of experience in a particular subject. Through lectures, the teacher transfers that knowledge to the students. The same is true of neural networks.

Neural networks are trained on data. The network (the teacher) gains knowledge from the data set, and that knowledge is stored as the ‘weights’ of the network. In transfer learning, these weights can be extracted and transferred to other neural networks (the students). So, instead of training a neural network from scratch, the learned features are transferred between networks.
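As a rough illustration of the teacher-and-student idea, the sketch below copies layer weights from one network to another using plain Python dictionaries. The layer names (`conv1`, `conv2`, `classifier`), the weight values, and the `transfer_weights` helper are all hypothetical. A real framework stores weights as tensors, but the bookkeeping is similar.

```python
import copy
import random


def transfer_weights(teacher, student, skip=("classifier",)):
    """Copy weights from teacher to student for every shared layer not in `skip`.

    Both networks are modeled as dicts mapping a layer name to a list of weights.
    Returns the names of the layers whose weights were transferred.
    """
    transferred = []
    for name, weights in teacher.items():
        if name in skip or name not in student:
            continue  # task-specific or missing layers are not transferred
        if len(weights) != len(student[name]):
            raise ValueError(f"shape mismatch for layer {name!r}")
        student[name] = copy.deepcopy(weights)  # the student gets its own copy
        transferred.append(name)
    return transferred


# Hypothetical teacher: already trained, with a 3-class classifier on top.
teacher = {
    "conv1": [0.12, -0.40],
    "conv2": [0.73, 0.05],
    "classifier": [0.9, -0.9, 0.3],
}

# Hypothetical student: same feature layers, randomly initialized,
# with a new 4-class classifier for its own task.
rng = random.Random(0)
student = {
    "conv1": [rng.uniform(-1, 1) for _ in range(2)],
    "conv2": [rng.uniform(-1, 1) for _ in range(2)],
    "classifier": [rng.uniform(-1, 1) for _ in range(4)],
}

moved = transfer_weights(teacher, student)
print(moved)  # ['conv1', 'conv2']
```

The classifier is skipped on purpose: its weights are tied to the teacher's original classes, so the student keeps its own freshly initialized classifier.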

In practice, developers remove the original classifier from the pre-trained model and add a new classifier that fits the purpose. The model is then fine-tuned using one of the following strategies:

- Train the model from scratch: In this case, the architecture of the pre-trained model is used and the model is trained on the new dataset. Since the model learns from scratch here, it requires a large dataset and a lot of computational power.

- Train a few layers and leave others frozen: The lower layers of the model represent general features (which are largely problem-independent), while the higher layers represent specific features (which are problem-dependent). When the learning is transferred from one data set to another, some of the layer weights are trained while the rest remain frozen. Layer freezing means that the weights of a trained layer remain unchanged when they are reused in a subsequent downstream task.

- Freeze the convolutional base: In this case, the convolutional base is kept in its original form and its output is used to feed the classifier. Here, the pre-trained model is used as a feature extraction mechanism.
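The three strategies differ mainly in which layers are allowed to change during training. The sketch below models that with a `trainable` flag per layer and an update step that skips frozen layers. The `Layer` class, the strategy names, and the gradient values are hypothetical and only stand in for what a deep learning framework would provide.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Layer:
    name: str
    weights: List[float]
    trainable: bool = True


def apply_strategy(base: List[Layer], strategy: str, n_frozen: int = 0) -> None:
    """Mark layers of the convolutional base as frozen or trainable.

    "train_all"   -> every layer is trained on the new dataset
    "partial"     -> the first n_frozen (lower) layers stay frozen
    "freeze_base" -> the whole base is frozen; only the classifier learns
    """
    if strategy == "train_all":
        frozen = 0
    elif strategy == "partial":
        frozen = n_frozen
    elif strategy == "freeze_base":
        frozen = len(base)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    for index, layer in enumerate(base):
        layer.trainable = index >= frozen


def sgd_step(layers: List[Layer], grads: Dict[str, List[float]], lr: float = 0.1) -> None:
    """Apply one gradient-descent update, leaving frozen layers untouched."""
    for layer in layers:
        if layer.trainable:
            layer.weights = [w - lr * g for w, g in zip(layer.weights, grads[layer.name])]


base = [Layer("conv1", [1.0]), Layer("conv2", [1.0]), Layer("conv3", [1.0])]
classifier = Layer("classifier", [1.0])  # the new classifier is always trained
grads = {layer.name: [1.0] for layer in base + [classifier]}  # made-up gradients

apply_strategy(base, "partial", n_frozen=2)
sgd_step(base + [classifier], grads)
print([(layer.name, layer.trainable) for layer in base])
# [('conv1', False), ('conv2', False), ('conv3', True)]
```

After the update, `conv1` and `conv2` still hold their original weights, while `conv3` and the classifier have moved. Switching the strategy to `"freeze_base"` would leave only the classifier changing.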

How Transfer Learning can be used in Different Scenarios:

Scenario 1: The data set is small but the data similarity is very high

Since the data similarity is high, retraining the model is not required. The model can be repurposed by customizing the output layers as per the problem. Here, the pre-trained model is used as the feature extractor.

Scenario 2: The data set is small and the data similarity is low as well

When this is the case, the idea is to freeze the first i layers of the n-layer pre-trained model and train the remaining (n - i) layers. Since the data similarity is low, it is important to retrain the higher layers of the model so that they are customized to the new data set.

Scenario 3: The data size is large but the data similarity is low

When this is the case, the predictions made by the pre-trained model won’t be effective. Hence, it is recommended to train the neural network from scratch according to the new data.

Scenario 4: The data set is large and the data similarity is high

In this case, you have hit the jackpot. The pre-trained model is at its most effective here, so it is best to retain its architecture and use its initial weights as the starting point for training on the new data.
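The four scenarios amount to a small decision table. The helper below is one way to write it down. The function name, the boolean inputs, and the returned labels are assumptions for illustration, and deciding what counts as a "large" data set or "high" similarity is left to the practitioner.

```python
def choose_strategy(large_dataset: bool, high_similarity: bool) -> str:
    """Map the four scenarios described above to a training approach."""
    if not large_dataset and high_similarity:
        # Scenario 1: use the pre-trained model as a feature extractor
        return "freeze the base and train new output layers"
    if not large_dataset and not high_similarity:
        # Scenario 2: keep the general lower layers, retrain the higher ones
        return "freeze the first i layers and train the remaining n - i layers"
    if large_dataset and not high_similarity:
        # Scenario 3: the pre-trained predictions will not be effective
        return "train from scratch on the new data"
    # Scenario 4: large data set and high similarity
    return "retain the pre-trained architecture and initial weights"


for large in (False, True):
    for similar in (True, False):
        print(f"large={large}, similar={similar} -> {choose_strategy(large, similar)}")
```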


Using the Transfer Learning Technique in a Real-World Project:

AI development is one of Daffodil's prime services. Recently, in one of its projects, where Artificial Intelligence was chosen for the image classification of banknotes, the transfer learning technique was used to train the ML model.

The client, the Reserve Bank of India (RBI), wanted to build a mobile app to enable visually impaired people in India to identify Indian banknotes. Taking the time constraints and accuracy requirements into consideration, the AI team chose transfer learning as the approach to train the ML model.

For the mobile app, the team selected a pre-trained model that had already been trained on ImageNet samples. The model was trained on at least one million images, which were hand-annotated to indicate what objects are pictured and then classified into categories. The features learned by this model were transferred to train on the custom banknote dataset, which is divided into denomination categories such as Rs 10, 20, 50, and 100. This helped the AI team achieve accurate output in less time.