The domain of Artificial Learning (AI) known as Deep Learning (DL) is fast gaining acceptance as a go-to technology for a number of use cases. When it comes to use cases where image data makes up most of the input fed to a system, a DL technique known as semantic segmentation offers accurate implementation outcomes.

Semantic image segmentation finds application in fields such as virtual try-on across e-commerce platforms, separating foreground from background, handwriting recognition, etc. with pixel-level precision. Semantic segmentation in video data can provide real-time solutions in areas such as autonomous driving, robotics, natural disaster recovery, etc.

Up ahead, we will take a deep dive into the semantic segmentation domain and look into the various techniques and methods that experts use for its implementation.

What Is Semantic Segmentation?

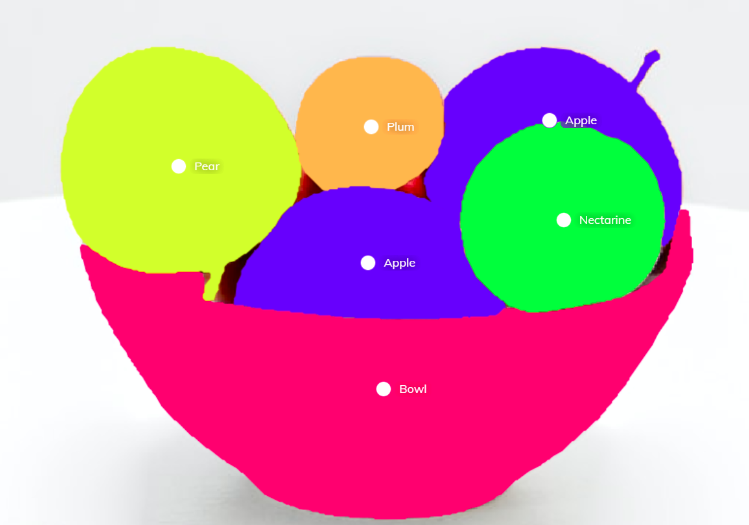

Semantic segmentation is a classification technique of DL that labels each pixel in an image that belongs to a certain class. Different instances of the same object are not differentiated in this type of image classification. It classifies a particular class of the input image.

After classifying the part of the image that contains the object in focus, it is separated from the rest of the image by applying a segmentation mask onto the entire image. The goal of semantic segmentation is fundamentally the conversion of an input image to a segmentation map by converting pixel values to class label values.

Image: Labeling with semantic segmentation

So, semantic segmentation can be broken down into the three following steps:

- Classification: Separating the object to be identified and classifying it as distinct from the rest of the image.

- Localization: After the object is identified, pixels are assigned certain values and a boundary is drawn around the object.

- Segmentation: The pixel values for identifying the object are grouped together and a segmentation mask is prepared which separates the pixels outside the object's boundary.

Customer Success Story: Daffodil helps a geospatial AI firm to map more than 30 cities by training machine learning models.

Deep Learning Methods For Semantic Segmentation

Image parsing using deep learning is done through the extraction of the component features and representations of the image. So, inherently, semantic segmentation facilitates image processing through the removal of noise from the image and deriving meaningful correlations between the pixels of the image. The DL methods that do this in various ways are as follows:

1)Fully Convolutional Network

A non-linear activation function, a pooling layer, and a convolutional layer make up Convolutional Neural Networks (CNN). In the process of semantic segmentation, we seek to extract features before utilizing them to divide the image into various segments. The problem with convolutional networks, though, is that the max-pooling layers cause the image's size to decrease as it moves through the network.

We need to effectively upsample the image using an interpolation method, which is accomplished by employing deconvolutional layers. The convolutional network that is utilized to extract features is referred to as an encoder in standard AI language. While the convolutional network that is used for upsampling is referred to as a decoder, the encoder also downsamples the image.

2)Convolutional Encoder-Decoder

Because of the information lost in the final convolution layer, the 1 X 1 convolutional network, the decoder's output is erratic. By employing very little information, the network finds it exceedingly challenging to perform upsampling. Due to CNN's success, a universal machine learning engine may be developed for use in a variety of computer vision applications.

A technique with good memory management and quick processing speed is required for real-time scene text recognition applications. The researchers developed two architectures, FCN-16 and FCN-8, to overcome this upsampling issue. In FCN-16, segmentation maps are produced by combining the final feature map and data from the preceding pooling layer. Adding data from a further prior pooling layer in FCN-8 aims to improve it even further.

3)U-Net

The development of CNN may lead to the creation of a general machine learning engine for use in a breadth of computer vision applications. Real-time scene text recognition applications need a method with appropriate memory management and quick processing performance. Encoder and decoder blocks are present in the U-net due to their design.

In order to create a U-net design, these encoder blocks communicate their extracted features to the matching decoder blocks. As of now, we know that the size of the image decreases as it passes through convolutional networks. This is due to the fact that it simultaneously max-pools layers, which results in the loss of information.

4)Pyramid Scene Parsing Network

The Pyramid Scene Parsing Network (PSPNet) was created to comprehend the scene completely. Because we are attempting to generate a semantic segmentation for each object in the provided image, scene parsing is challenging. If a network can use the scene's global context information, it can detect spatial similarities.

There are four convolutional parts in the module for pyramid pooling. While the first component, shown in red, divides the feature map into a single bin output, the other three create pooled representations for various locations. The final feature representation is created by concatenating these outputs after they have each been independently upsampled to the same size.

5)Atrous Convolution

In the past few years, several enhancements to fully linked convolution nets for semantic segmentation of fine details have been reexamined. Introducing zero-values into the convolution kernels, dilated convolutions, or atrous convolutions, which were originally defined for wavelet analysis without signal decimation, increases the window size without increasing the number of weights.

A number of convolutions are implemented with the following function in the deep learning framework TensorFlow: tf.nn.atrous_conv2d. Utilizing an atrous or dilated convolution has the advantage of reducing computing costs while capturing more data. Compared to downsampling-upsampling procedures, atrous convolution produces a denser representation.

ALSO READ: AI Center of Excellence: Applications of Image Segmentation in the Real World

Several Industries Rely On Quality Semantic Segmentation

Differentiation of objects in images at a pixel level can help immensely in expediting pathological diagnoses, criminal investigation, and alleviating financial fraud. The right semantic segmentation method, when applied to the appropriate use case, can produce very cost-effective and time-sensitive outcomes across a wide array of industries. If you have a specific requirement for the implementation of AI or DL to enhance your product, you can book a free consultation with us today.