Have you ever noticed this? The eCommerce app you usually shop from offers a seamless experience, but during a sale the same app crashes often. This happens because the app’s servers are not prepared to handle the load of so many concurrent users.

To avoid such performance and availability issues during high-traffic periods, it is important to test how well the application copes with the load of concurrent users and to distribute that load. That’s where load balancing comes into the picture.

In this blog post, we will discuss what load balancing is and why it is important. We will also talk about the role of performance testing in helping the DevOps team balance the load on the server efficiently. Let’s get started.

Load Balancing and its Benefits:

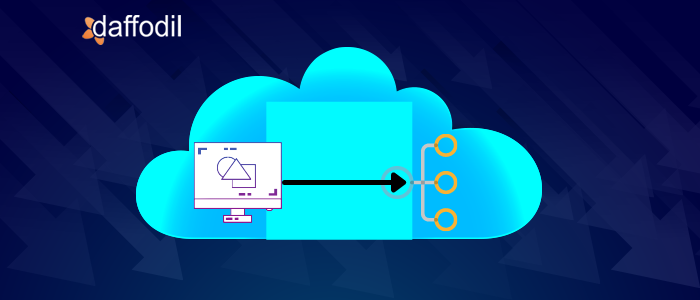

Load balancing is the process of distributing workloads across multiple servers to prevent any single server from getting overloaded and breaking down. It helps keep the performance of an application up to the mark even during peak traffic hours.

When the number of users is within limits, the application has sufficient resources (CPU, RAM, etc.) to respond. In this situation, the end user’s device receives a timely response and the user experience is seamless.

In contrast, when there is a rush of users, the resources on the server cannot keep up and respond on time, resulting in performance and availability issues.
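To make the idea of distributing workloads concrete, here is a minimal sketch of round-robin distribution, one of the simplest load balancing strategies. The server names (`app-1`, `app-2`, `app-3`) and the `RoundRobinBalancer` class are hypothetical and only for illustration. A production load balancer does far more than this.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, rotating order (illustrative only)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(list(servers))

    def pick(self):
        """Return the server that should handle the next request."""
        return next(self._rotation)


def distribute(balancer, request_count):
    """Route request_count requests and count how many each server received."""
    return Counter(balancer.pick() for _ in range(request_count))


if __name__ == "__main__":
    # Hypothetical server names; nine requests end up spread evenly.
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print(dict(distribute(balancer, 9)))
```

With three servers and nine requests, each server handles three of them, so no single machine carries the whole rush.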

How does load balancing work and why is it needed?

When computers were introduced, no one expected them to work this much. Today, these machines are pushed beyond their limits, with a concurrent load of hundreds or thousands of users piled onto them. This results in critical performance problems.

Moreover, machines are prone to failure. Placing the entire load on a single machine creates a single point of failure, which is a disastrous situation for any application owner because it leads to availability issues.
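The single point of failure described above can be sketched in a few lines. The example below assumes a hypothetical `FailoverPool` that rotates through servers and skips any that have been marked as down. How a server gets marked down (for example, by a health check) is outside the scope of this sketch.

```python
class FailoverPool:
    """Rotates through servers and skips those marked unhealthy (illustrative only)."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._next = 0

    def mark_down(self, server):
        """Stop routing traffic to a server that has failed."""
        self._healthy.discard(server)

    def mark_up(self, server):
        """Resume routing traffic to a known server that has recovered."""
        if server in self._servers:
            self._healthy.add(server)

    def pick(self):
        """Return the next healthy server in rotation, or raise if none is left."""
        for _ in range(len(self._servers)):
            candidate = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    pool = FailoverPool(["app-1", "app-2"])  # hypothetical server names
    pool.mark_down("app-1")
    print(pool.pick())  # traffic keeps flowing to the remaining server
```

With only one server in the pool, marking it down leaves nothing to route to, which is exactly the availability problem a single machine creates.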

For disaster management, i.e. ensuring the performance and availability of the application during high traffic, many recommend duplicating the server. However, simply rerouting traffic to another machine and burdening it instead does not solve the problem. It is also not an economical solution.

Then, what can be done to ensure that a spike or drop in traffic does not affect the performance of the application? The answer is to scale out the resources.

Scaling out distributes the computational load across multiple servers so that the workload can be handled as necessary. Apart from distributing the load, it allows capacity to be added or removed as needed.
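As a rough illustration of adding or removing capacity as needed, the sketch below estimates how many servers a given number of concurrent users would call for. The function name, the per-server capacity of 500 users, and the minimum and maximum limits are all assumptions made for this example. Real capacity figures come from load testing your own application.

```python
import math


def servers_needed(concurrent_users, users_per_server, min_servers=1, max_servers=10):
    """Estimate the server count for a load, clamped to a configured range.

    users_per_server is an assumed capacity figure, not a measured one.
    """
    if users_per_server <= 0:
        raise ValueError("users_per_server must be positive")
    wanted = math.ceil(concurrent_users / users_per_server)
    return max(min_servers, min(max_servers, wanted))


if __name__ == "__main__":
    # Hypothetical traffic levels: a quiet hour, a normal day, and a sale.
    for users in (120, 2500, 100000):
        print(users, "users ->", servers_needed(users, users_per_server=500), "servers")
```

The upper limit reflects the cost concern: capacity grows with traffic, but only up to what the budget allows, and shrinks again when the traffic drops.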

Load Balancing for Software Application: How to Get Started?

Creating a server configuration that manages the peak load is the core job of the DevOps team at Daffodil, in conjunction with the Quality Analysts.

To test application performance for concurrent users, load testing for N users is performed in a simulated environment. This is done using third-party tools for functional and performance testing.

The report thus generated helps identify the areas of the application that affect performance. This report is generally forwarded to the DevOps team, which then takes care of the server configuration and ensures that the load is distributed evenly across servers to maintain performance during peak traffic hours.
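For a feel of what load testing for N concurrent users involves, here is a simplified sketch. It is not how any particular third-party tool works: `fake_request` is a hypothetical stand-in that only sleeps for 10 ms, whereas a real test would send requests to the application itself.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fake_request(user_id):
    """Stand-in for one user's request; returns the observed latency in seconds."""
    started = time.perf_counter()
    time.sleep(0.01)  # assumed server work; replace with a real call in practice
    return time.perf_counter() - started


def run_load_test(concurrent_users, request=fake_request):
    """Fire one request per simulated user in parallel and summarize the latencies."""
    if concurrent_users < 1:
        raise ValueError("concurrent_users must be at least 1")
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = sorted(pool.map(request, range(concurrent_users)))
    # Nearest-rank 95th percentile of the sorted latencies.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "users": concurrent_users,
        "mean_s": mean(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


if __name__ == "__main__":
    print(run_load_test(50))
```

Running this for increasing values of N and watching how the mean and 95th percentile latencies change gives a small-scale version of the kind of report a load test produces.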

Several websites and apps (especially in the eCommerce industry) face performance issues when their servers receive a large number of concurrent requests. If your application is among them, connect with our team to get a resolution.

Our team analyzes the solution, scalability, architecture, cost, and other significant factors to suggest the best server configuration for distributing the load. You can connect with our team through our 30-minute free consultation.