Large Language Models (LLMs) have long been at the forefront of artificial intelligence, leveraging vast amounts of textual data to perform tasks ranging from language translation to content generation. These models have laid the foundation for numerous applications, fundamentally transforming the way machines understand and generate human-like text. However, as the landscape of AI continues to evolve, a shift is occurring with the rise of Multimodal LLMs.

Unlike their predecessors, Multimodal LLMs break free from the constraints of text-only understanding. They represent a leap forward in AI capabilities by seamlessly integrating various data inputs, including text, images, audio, and more. This integration allows these models to not only comprehend language intricacies but also interpret and respond to the rich information present in other modalities.

In this blog, we delve into the details of Multimodal LLMs and explore how they are not only shaping the future of AI but also redefining the very essence of human-machine interaction.

Understanding Multimodal LLMs

Multimodal LLMs are a new breed of smart computer programs that can do more than just read and understand text. These models are designed to process different types of information, like images and audio, in addition to words. The technology behind them is quite sophisticated, involving advanced computer algorithms and structures.

These models use complex neural networks that mimic the way our brains work to understand and process information. Think of them as really smart assistants that not only read and understand text like traditional models but can also "see" and "hear" to make sense of images and audio.

1. Integration of Modalities

What makes these models stand out is their ability to bring together information from different sources seamlessly. They can understand pictures, listen to sounds, and still make sense of words – all at the same time.

Visual Modality: These models can "look" at pictures and videos, recognizing objects, scenes, and even describing what's happening in a photo.

Auditory Modality: With the capability to "hear," they can understand spoken words. This means they can transcribe what someone is saying or even respond to voice commands.

Linguistic Modality: While they're great at understanding words, what sets them apart is their ability to use language in context. It's not just about knowing words; it's about understanding the meaning behind them.

Other Modalities: Depending on what the model is designed for, it can also handle other types of information, like data from sensors or touch-based feedback.

2. Advances in Neural Networks and Machine Learning

The development of these models has been possible because of some big improvements in how computers learn and understand things.

Transfer Learning Techniques: It's like the model is learning from one task and applying that knowledge to another. Imagine learning to ride a bike and then using some of those skills to learn how to skateboard – that's what these models do.

Attention Mechanisms: These models are good at paying attention to what's important. Just like when you're trying to understand a conversation in a noisy room, these models focus on what matters most in the information they're processing.

Scaling Architectures: They're also getting bigger – not in physical size, but in the number of things they can learn. The more they learn, the better they become at understanding and handling complex information.

Understanding how these models work gives us a glimpse into the future of computers – where they're not just good at reading and writing but can truly understand and interact with the world in a way that's closer to how we do. This is a big step forward in making technology more helpful and intuitive for us.

Top 15 Industry Applications of Multimodal LLMs

Multimodal LLMs are making substantial contributions across diverse industries, enhancing operational efficiency and strategic decision-making. Let's explore practical applications of these advanced models in real-world business scenarios.

1) E-commerce

Product Recommendations: Multimodal LLMs can analyze both textual product descriptions and images to provide personalized and accurate product recommendations to users. This enhances the user experience and increases the likelihood of conversions.

Visual Search: Users can utilize visual input to search for products. Multimodal LLMs can interpret images and match them with product databases, allowing for a more intuitive and efficient search experience.

User-Generated Content Analysis: LLMs can analyze user-generated content, including reviews with text and images, to provide more nuanced insights into product satisfaction and potential areas for improvement.

2) Healthcare

Medical Imaging Analysis: Multimodal LLMs can assist in diagnosing and interpreting medical images, such as X-rays and MRIs, by combining visual understanding with knowledge from textual medical records. This can improve diagnostic accuracy and speed up the decision-making process for healthcare professionals.

Clinical Report Generation: LLMs can generate comprehensive and coherent clinical reports by assimilating information from both text-based patient records and medical images.

Health Monitoring with Wearables: LLMs can analyze both textual health data (such as patient records) and visual data (from wearables like smartwatches with cameras) to provide a comprehensive overview of an individual's health status.

3) Education

Interactive Learning Materials: Multimodal LLMs can create interactive and engaging educational materials by combining textual content with relevant images and diagrams. This aids in improving understanding and retention among students.

Automated Grading: LLMs can evaluate and provide feedback on assignments by considering both text and visual elements, offering a more holistic assessment of student work.

4) Autonomous Vehicles

Context-Aware Navigation: Multimodal LLMs can assist autonomous vehicles in understanding and responding to textual and visual cues. This includes processing road signs, interpreting traffic conditions from images, and integrating this information for safer and more efficient navigation.

Enhanced Driver Assistance Systems: LLMs can provide real-time assistance to drivers by analyzing both text and visual data, contributing to features like lane-keeping assistance and collision avoidance.

5) Finance

Fraud Detection: LLMs can play a crucial role in fraud detection by analyzing textual transaction data and associated visual information. They can identify unusual patterns or discrepancies that may indicate fraudulent activities, contributing to more robust security measures in financial transactions.

Market Research: Multimodal LLMs can analyze textual financial reports, market analyses, and visual data to generate comprehensive insights for market research. This can aid financial analysts in understanding market trends and making data-driven predictions.

Personal Finance and Advisory Services: LLMs can be employed in personal finance applications to provide advice and insights to users by analyzing both textual financial data (such as budget information) and relevant visual elements. This can help individuals make more informed financial decisions.

6) Travel

Multimodal Trip Planning: LLMs can assist travelers in planning trips by analyzing both textual preferences and visual cues. This could include personalized recommendations for destinations, accommodations, and activities based on individual preferences.

Augmented Reality Travel Guides: LLMs can contribute to augmented reality travel guides that overlay textual and visual information onto the real-world environment, enhancing the exploration experience for tourists.

Benefits of Multimodal LLMs Over Unimodal Systems

Opting for multimodal LLMs over traditional unimodal systems yields several strategic benefits in the business landscape.

Comprehensive Understanding: Multimodal LLMs provide a holistic grasp of information, simultaneously interpreting various data types. This nuanced understanding enhances market intelligence and customer insights, fostering a deeper understanding of business dynamics.

Enhanced Decision-Making: Industries reliant on data-driven decisions, such as healthcare and automotive, benefit from the amalgamation of written and visual information. Multimodal LLMs contribute to more informed and sophisticated decision-making processes, enabling organizations to navigate complex challenges with agility.

Elevated User Experience: In customer engagement and content delivery, multimodal LLMs enhance the user experience. By considering both linguistic and visual elements, businesses can tailor interactions to be more engaging and personalized, fostering stronger connections with their audience and boosting customer satisfaction.

Operational Efficiency: Multimodal LLMs streamline processes, reducing the time and resources required for tasks. In healthcare, for instance, faster diagnostics lead to more efficient patient care. In education, streamlined assessment processes contribute to improved learning outcomes.

Some Examples of Multimodal LLMs

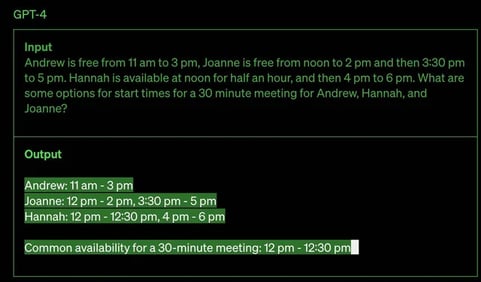

1) Open AI’s GPT-4

The recently launched GPT-4 by OpenAI exemplifies the capabilities of LLMs. While GPT-4 may not achieve human proficiency in every real-world scenario, it has consistently demonstrated human-level performance across various professional and academic benchmarks.

Image source: OpenAI

2) PaLM-E

PaLM-E represents a novel robotics model developed collaboratively by researchers from Google and TU Berlin. This innovative model leverages knowledge transfer from diverse visual and language domains to enhance the learning capabilities of robots. In contrast to previous approaches, PaLM-E uniquely trains the language model to assimilate raw sensor data directly from the robotic agent. This approach yields an exceptionally efficient robot learning model, establishing itself as a cutting-edge, general-purpose visual-language model.

3) OpenAI's DALL-E

DALL-E is an AI model that generates images from textual descriptions. It extends the GPT-3 model to include image generation capabilities. By providing a textual prompt to DALL-E, it can create a wide variety of images that match the description, even if the description is highly imaginative or complex. For example, if you ask for "an armchair in the shape of an avocado," DALL-E can create a corresponding image that reflects this unique concept.

3) Microsoft: Kosmos-1

This MLLM is designed with the capability to comprehend various modalities, learn in context (few-shot learning), and execute instructions (zero-shot learning). Trained entirely from the ground up using web data, which encompasses both text and images, as well as image-caption pairs and text data.

Kosmos-1 exhibits impressive performance across a spectrum of language-related tasks, including understanding, generation, perception-language tasks, and vision-related activities. With native support for language, perception-language, and vision functions, Kosmos-1 excels in handling tasks that involve both perception-intensive and natural language elements.

ALSO READ: Multimodal AI Explained: Major Applications across 5 Different Industries

Harnessing AI for Smarter Business Outcomes

As we've seen, these models are not just adept at understanding language but also proficient in interpreting diverse types of information, from text to images and even audio. This versatility broadens the scope of possibilities for AI applications, promising a more enriched user experience.

Looking forward, the potential impact is considerable. Improved interactions between humans and machines, interfaces that feel more natural, and innovations in creative domains signal a shift towards a more integrated role for AI in our daily lives. Yet, as we embrace this future, it's crucial to tread carefully and consider the ethical dimensions at play.

The ethical considerations surrounding Multimodal LLMs, such as the potential for bias and concerns about privacy, necessitate ongoing vigilance. Balancing the drive for innovation with responsible development becomes paramount to ensure that these technologies contribute positively to our societal progress.